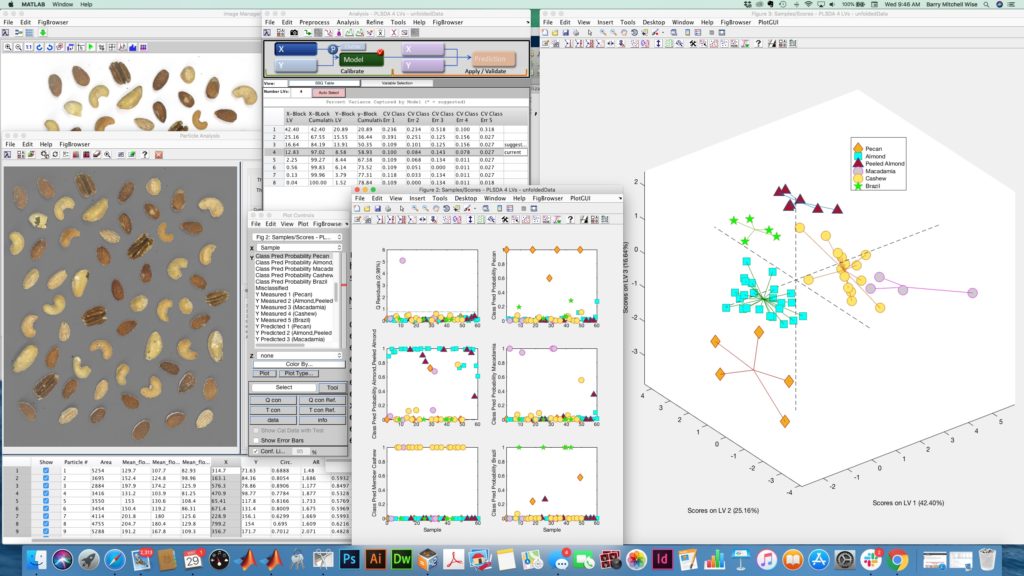

Developing a machine learning model is typically a fairly involved process, and the software for doing it is commensurately complex. Whether the model is a Partial Least Squares (PLS) regression, an Artificial Neural Network (ANN) or a Support Vector Machine (SVM), a lot of calculations are needed to parameterize it: projections, matrix inverses and decompositions, fit and cross-validation statistics, optimization, you name it, it’s in there. That means lots of loops, logic and convergence checks.

On the other hand, the application of these models, once developed, is typically quite straightforward. Most models can be applied to new data using a fairly simple recipe involving matrix multiplications, scalings, projections, activation functions, etc. There are exceptions, such as preprocessing methods like iterative Weighted Least Squares (WLS) baselining and models like Locally Weighted Regression (LWR), where you really don’t have a model per se; you have a data set and a procedure. (More on WLS and LWR in a minute!) But in the vast majority of cases, effective models can be developed using methods whose predictions can be reduced to simple formulas.
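
To make the "simple recipe" idea concrete, here is a minimal sketch of applying an autoscaled linear regression model (the form a PLS, PCR or MLR model reduces to at prediction time). It is not Model_Exporter output: the parameter names and all numeric values are hypothetical, chosen only to illustrate that prediction needs nothing more than a scaling step and a dot product.

```python
# Hypothetical exported parameters for an autoscaled linear regression model.
# All values are made up for illustration; a real model would supply its own.
X_MEAN = [1.0, 2.0, 3.0]        # calibration mean of each variable
X_STD = [0.5, 1.0, 2.0]         # calibration standard deviation of each variable
REG_VECTOR = [0.2, -0.1, 0.4]   # regression coefficients in the scaled space
Y_MEAN = 10.0                   # mean of the calibration response


def predict(x):
    """Autoscale one sample, project it onto the regression vector, add the offset."""
    if len(x) != len(REG_VECTOR):
        raise ValueError(
            "expected %d variables, got %d" % (len(REG_VECTOR), len(x))
        )
    # Preprocessing: subtract the calibration mean and divide by the calibration std.
    scaled = [(xi - m) / s for xi, m, s in zip(x, X_MEAN, X_STD)]
    # Prediction: dot product with the regression vector plus the response mean.
    return sum(si * bi for si, bi in zip(scaled, REG_VECTOR)) + Y_MEAN
```

Calling `predict([1.5, 2.0, 3.0])` on a new sample involves no loops over calibration data, no convergence checks and no dependencies beyond the language itself, which is the point of reducing a model to a recipe.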

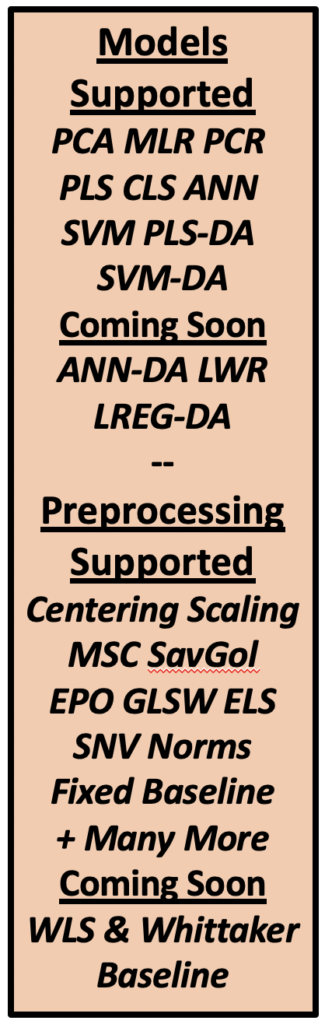

Enter Model_Exporter. When you create any of the models shown at right (key to acronyms below) in PLS_Toolbox or Solo, Model_Exporter can take that model and create a numerical recipe for applying it to new data, including the supported preprocessing steps. This recipe can be output in a number of formats, including MATLAB .m, Python .py or XML. And using our freely available Model_Interpreter, the XML file can be incorporated into Java, Microsoft .NET, or generic C# environments.

So what does all this mean?

- Total model transportability. Models can be built into any framework you need them in, from process control systems to hand-held analytical instruments.

- Minimal footprint. Exported models are very small and carry minimal computing overhead, so they can run with minimal memory and computing power.

- Order of magnitude faster execution. The lightweight recipe produces predictions much faster than the original model.

- Complete transparency. There’s no guessing as to exactly how the model gets from measurements to predictions; it’s all there.

- Simplified model validation. Don’t validate the code that makes the model, validate the model!

This is why our customers in many industries, from analytical instrument developers to the chemical process industries, are getting their models online using Model_Exporter. It is creating a revolution in how online models are generated and executed.

And what about those cases like WLS and LWR noted above? We’re working to create add-ons so exported models can utilize these functions too. Look for them, along with some additional model types, in the next release.

Is it for everybody? Well, not quite. There are still times when you need a full-featured prediction engine like our Solo_Predictor, which has built-in communication protocols (e.g. socket connections) and scripting ability, and can run absolutely any model you can make in PLS_Toolbox or Solo (like hierarchical and even XGBoost). But we’re seeing more and more instances of companies utilizing the advantages of Model_Exporter.

Join the Model_Exporter revolution for the compact, efficient and seamless application of your machine learning models!

BMW

Models Supported: Principal Components Analysis (PCA), Multiple Linear Regression (MLR), Principal Components Regression (PCR), Partial Least Squares Regression (PLS), Classical Least Squares (CLS), Artificial Neural Networks (ANN), Support Vector Machine Regression (SVM), Partial Least Squares Discriminant Analysis (PLS-DA), Support Vector Machine Discriminant Analysis (SVM-DA), Artificial Neural Network Discriminant Analysis (ANN-DA). Coming Soon: Locally Weighted Regression (LWR), Logistic Regression Discriminant Analysis (LREG-DA).

Preprocessing Methods Supported: General scaling and centering options including Mean and Median Centering, Autoscaling, Pareto and Poisson Scaling, Multiplicative Scatter Correction (MSC), Savitzky-Golay Smoothing and Derivatives, External Parameter Orthogonalization (EPO), Generalized Least Squares Weighting (GLSW), Extended Least Squares, Standard Normal Variate (SNV), 1-, 2-, infinity and n-norm Normalization, Fixed point spectral baselining. Coming Soon: Iterative Weighted Least Squares Baselining (WLS) and Whittaker Baselining.
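
Several of the preprocessing methods listed above are themselves simple, closed-form recipes. As a rough sketch (not the exported code, and with hypothetical function names), Standard Normal Variate and p-norm normalization can each be written in a few lines. The use of the sample standard deviation (n-1 denominator) in `snv` is an assumption; check it against the convention your own model was built with.

```python
import statistics


def snv(spectrum):
    """Standard Normal Variate: center and scale one spectrum by its own statistics."""
    mean = statistics.fmean(spectrum)
    # Assumption: sample standard deviation (n-1 denominator).
    std = statistics.stdev(spectrum)
    if std == 0:
        raise ValueError("cannot apply SNV to a constant spectrum")
    return [(v - mean) / std for v in spectrum]


def normalize(spectrum, order=1):
    """Divide one spectrum by its p-norm; order may be a positive number or float('inf')."""
    if order == float("inf"):
        # Infinity-norm: largest absolute value in the spectrum.
        norm = max(abs(v) for v in spectrum)
    else:
        # General p-norm: (sum of |v|^p) raised to the power 1/p.
        norm = sum(abs(v) ** order for v in spectrum) ** (1.0 / order)
    if norm == 0:
        raise ValueError("cannot normalize an all-zero spectrum")
    return [v / norm for v in spectrum]
```

Both functions act on a single sample and need no stored calibration data, which is why steps like these fit naturally into an exported recipe. Iterative methods such as WLS baselining do not reduce to a fixed formula in the same way.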

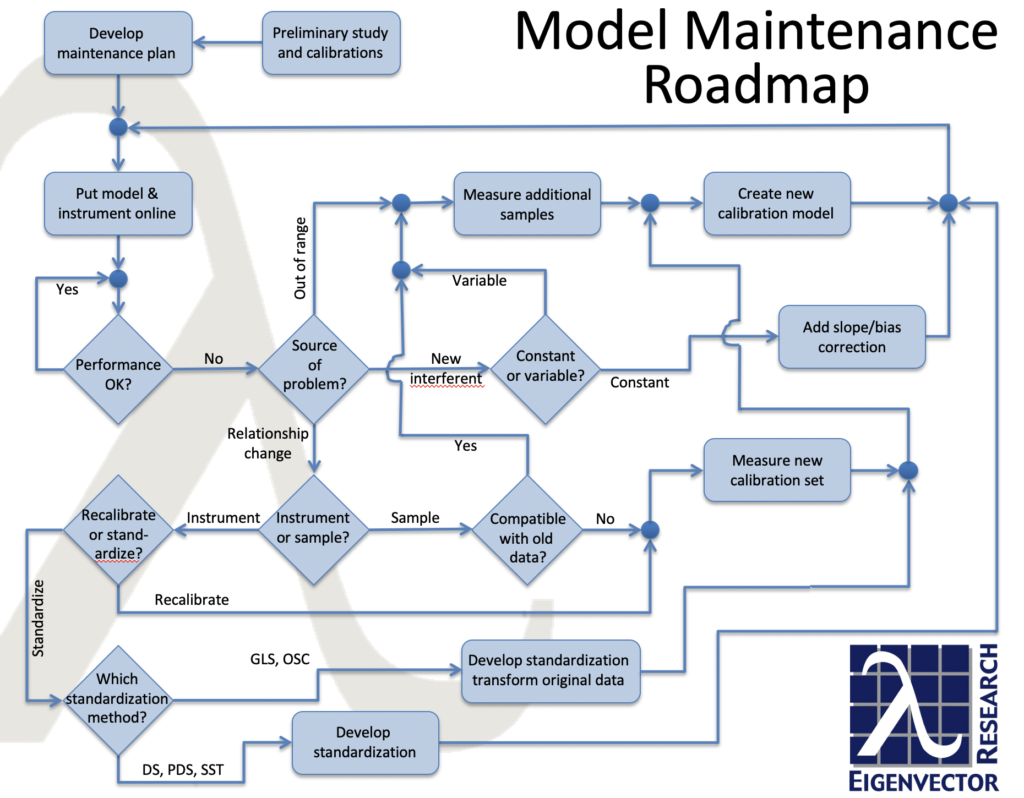

I’d like to draw special attention to the Calibration Model Maintenance course. It’s an unfortunate fact that most multivariate data-derived models do not last forever. There are many things that can change (e.g. instrument behavior, feed stream compositions, etc.) and cause model outputs to be biased or noisy. Worse still, in many instances no plan has been developed to deal with this reality. In Calibration Model Maintenance we’ll go over our Roadmap (at right) and also cover methods and tools (such as instrument standardization/calibration transfer methods and methods to detect model output drift) that can be used as part of an overall model maintenance strategy.

Many of our software users are among these new homeworkers and we are working to accommodate them. In particular, users that work with our floating license versions of

Oh boy. Déjà vu. In the late 80s and 90s during the first Artificial Neural Network (ANN) wave there were a slew of companies making similar promises about the value they could extract from data, particularly historical/happenstance process data that was “free.” One slogan from the time was “Turn your data into Gold.” It was the new alchemy. There were successful applications but there were many more failures. The hype eventually faded. One of the biggest lessons learned: Garbage In, Garbage Out.
