Category Archives: Consulting

We used to call it “Chemometrics”

Feb 23, 2022

The term chemometrics was coined by Svante Wold in a grant application he submitted in 1971 while at the University of Umeå. Supposedly, he thought that creating a new term (in Swedish it is ‘kemometri’) would increase the likelihood of his application being funded. In 1974, while on a visit to the University of Washington, Svante and Bruce Kowalski founded the International Chemometrics Society over dinner at the Casa Lupita Mexican restaurant. I’d guess that margaritas were involved. (Fun fact: I lived just a block from Casa Lupita in the late 70s and 80s.)

Chemometrics is a good word. The “chemo” part of course refers to chemistry and “metrics” indicates that it is a measurement science: a metric is a meaningful measurement taken over a period of time that communicates vital information about a process or activity, leading to fact-based decisions. Chemometrics is therefore measurement science in the area of chemical applications. Many other fields have their metrics: econometrics, psychometrics, biometrics. Chemical data is also generated in many other fields including biology, biochemistry, medicine and chemical engineering.

So chemometrics is defined as the chemical discipline that uses mathematical, statistical, and other methods employing formal logic to design or select optimal measurement procedures and experiments, and to provide maximum relevant chemical information by analyzing chemical data.

Although it is a nearly perfect word to capture what we do here at Eigenvector, the term Chemometrics comes with two significant problems: 1) In spite of the existence of the field for nearly five decades and two dedicated journals (Journal of Chemometrics and Chemometrics and Intelligent Laboratory Systems), the term is not widely known. I still run into graduates of chemistry programs who have never heard the term, and of course it is even less well known in the related disciplines, and less yet in the general population. 2) Many who are familiar with the term think it refers primarily to a collection of projection methods (e.g. Principal Components Analysis (PCA), Partial Least Squares Regression (PLS)), and therefore that other Machine Learning (ML) methods (e.g. Artificial Neural Networks (ANN), Support Vector Machines (SVM)) are not chemometrics regardless of where they are applied. Problem number 2 is exacerbated by the current Artificial Intelligence (AI) buzz and the proclivity of managers and executives towards things that are new and shiny: “We have to start using AI!”

This wouldn’t matter much if choosing the right terms weren’t so critical to being found. Search engines pretty much deliver what was asked for, so you have to be sure you are using terms that people actually search on. So what to use?

A common definition of artificial intelligence is the theory and development of computer systems able to perform tasks that normally require human intelligence. This is a rather low bar. Many of the models we develop make better predictions than humans could to begin with. But AI is generally associated with problems such as visual perception and speech recognition, things that humans are particularly adept at. These AI applications generally require very complex deep neural networks etc. And so while you could say we do AI this feels like too much hyperbole, and certainly there are other arguments against using this term loosely.

Machine learning is the use and development of computer systems that are able to learn and adapt without following explicit instructions, by using algorithms and statistical models to analyze and draw inferences from patterns in data. Most researchers (apparently) view ML as a subset of AI. Do a search on “artificial intelligence machine learning images” and you’ll find many Venn diagrams illustrating this. I tend to see it as the other way around: AI is the subset of ML that uses complex models to address problems like visual perception. I’ve always had a problem with the term “learning” as it anthropomorphizes data models: they don’t learn, they are parameterized! (If these models really do learn I’m forced to conclude that I’m just a machine made out of meat.) In any case, models from Principal Components Regression (PCR) through XGBoost are commonly considered ML models, so certainly the term machine learning applies to our software.

Process analytics is a much less used term and particular to chemical process data modeling and analysis. There are however conferences and research centers that use this term in their name, e.g. IFPAC, APACT and CPACT. Cheminformatics sounds relevant to what we do but in fact the term refers to the use of physical chemistry theory with computer and information science techniques in order to predict the properties and interactions of chemicals.

Data science is defined as the field that uses scientific methods, processes, algorithms and systems to extract knowledge and insights from data. Certainly this is what we do at Eigenvector, but of course primarily in chemistry/chemical engineering where we have a great deal of specific domain knowledge such as the fundamentals of spectroscopy, chemical processes, etc. Thus the term chemical data science describes us pretty well.

So you will find that we will use the terms Machine Learning and Chemical Data Science a lot in the future though we certainly will continue to do Chemometrics!

BMW

Sociopaths, Enablers and Due Diligence

Apr 15, 2020

I just finished reading “Bad Blood: Secrets and Lies in a Silicon Valley Startup” by John Carreyrou. It is the story of Theranos and its founder Elizabeth Holmes. Our Bob Roginski sent it to me after I mentioned that I’d seen the HBO documentary “The Inventor: Out for Blood in Silicon Valley.” I highly recommend the book over the much shorter documentary.

I’ve followed this story a bit since first hearing of Theranos and their claims to be able to run hundreds of blood tests simultaneously on just a few drops of blood. Based on my experience this seemed more than unlikely. At Eigenvector we’ve worked on quite a few medical device development projects. This includes projects involving atherosclerotic plaques, cervical cancer, muscle tissue oxygenation, burn wound healing, limb ischemia, non-invasive glucose monitoring, non-invasive blood alcohol estimation and numerous other projects involving blood and urine tests. So we’ve developed an appreciation of how hard it is to develop new analytical techniques on biological samples. Beyond that, we’ve also learned a lot about the error in the reference methods we were trying to match. Even under ideal conditions, with standard laboratory equipment and large sample volumes, results are far from perfect.

So when the whole thing was blown wide open by Carreyrou’s reports in the Wall Street Journal I wasn’t surprised. I read several of the follow-up articles as well. But as one reviewer of the book said, “No matter how bad you think the Theranos story was, you’ll learn that the reality was actually far worse.” I’ll say. Honestly, it took me a while to get into the book; in fact, I put it down for a month because it just made me so mad.

We’ve had a few consulting clients over the years that were, let’s say, overly enthusiastic. To varying degrees some of them have been unrealistic about the robustness of their technology and have failed to address problems that could potentially impact accuracy. (I’m happy to report that none of our current consulting clients fall into this category.) In some instances things we saw as potential show stoppers were simply declared non-problems. In other cases people abused the data, including cherry-picking samples and building grossly overfit, non-validated models. (My favorite line was when one client’s lawyer told me I didn’t know how to use my own software.) We have had falling outs with some of these folks when our analysis didn’t support their contentions.

But none of the people we’ve dealt with came close to overselling their technology to the degree that Holmes did. As I see it there are two reasons for this. The first is that Holmes is a sociopath. Carreyrou said he would leave it to others to make that assessment but it seems obvious to me. Maybe she didn’t start out that way, but it’s clear that very early on she started believing her own bullshit. Defending that belief became all that mattered. And she teamed with Ramesh “Sunny” Balwani who was if anything worse. They ran an organization that was based on secrecy, lies and intimidation. And they made sure that nobody on their board had the scientific background to question the feasibility of what they were claiming they’d do.

But the second reason they got as far as they did was that they were exceedingly well connected. The book identifies these connections but doesn’t really discuss them in terms of being enablers of the scam that ensued. It started with Elizabeth’s parents’ connections to people around Stanford, and Elizabeth’s ChemE professor at Stanford, Channing Robertson. These led to funding and legal help. From there Holmes just played leapfrog with these connections, ending at former Secretary of State George Shultz (who I learned actually lives on the Stanford campus) and his circle including Henry Kissinger, James Mattis and high-profile lawyer David Boies. (Boies led the Justice Department’s anti-trust suit against Microsoft, and was Al Gore’s lawyer in the 2000 election.) Boies is famous for his scorched earth tactics, and he and his firm kept the lid on things at Theranos by threatening lawsuits against potential whistleblowers and further intimidating them by hiring private eyes to surveil them. Having no firmer grasp of the science and engineering realities of what Theranos was attempting than the board members, Boies’ firm did this in exchange for stock options.

It’s the second point here that really bothers me. There will always be people like Holmes who are willing to ignore the damage that they may do to others while pursuing wealth or fame and fortune. But this behavior was enabled by well-heeled and well-connected people who failed completely on their due diligence obligations from financial, scientific and, most importantly, medical ethics perspectives. Somehow they completely forgot Carl Sagan’s adage “extraordinary claims require extraordinary evidence.” It’s hard to imagine that this could have ever gotten so far out of hand had Holmes attended a state university and had unconnected parents. Investors to whom you are not connected and who are not so wealthy as to be able to afford to lose a lot of money have a much higher standard of proof.

In our consulting capacity at Eigenvector we always try to be optimistic about what’s possible, and we do our best to help clients achieve success. But we never pull our punches with regard to the limitations of the technology we’re working with and the models we develop based on the data it produces. Theranos produced millions of inaccurate blood tests that were eventually vacated. While it doesn’t appear that anybody actually died because of these inaccurate tests, they certainly caused a lot of anxiety, lost time and expense among the customers. It’s our pledge that we will always do our due diligence, and expect those around us to do the same, so that Eigenvector will never be part of a fiasco like this.

BMW

Domain Knowledge and the New “Turn Your Data Into Gold” Rush

Jan 29, 2020

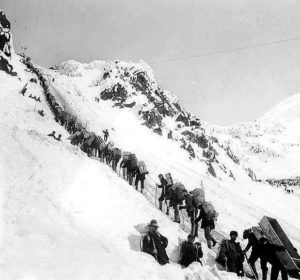

A colleague wrote to me recently and asked if Eigenvector was considering rebranding itself as a Data Science company. My knee-jerk response was “isn’t that what we’ve been for the last 25 years?” But I know exactly what she meant: few people have heard of Chemometrics but everybody has heard about Data Science. She went on to say “I am spending increasing amounts of time calming over-excited people about the latest, new Machine Learning (ML) and Artificial Intelligence (AI) company that can do something slightly different and better…” I’m not surprised. I know it’s partly because Facebook and LinkedIn have determined that I have an interest in data science, but my feeds are loaded with ads for AI and ML courses and data services. I’m sure many managers subscribe to the Wall Street Journal’s “Artificial Intelligence Daily” and, like the Stampeders on Chilkoot Pass pictured above, don’t want to miss out on the promised riches.

Oh boy. Déjà vu. In the late 80s and 90s during the first Artificial Neural Network (ANN) wave there were a slew of companies making similar promises about the value they could extract from data, particularly historical/happenstance process data that was “free.” One slogan from the time was “Turn your data into Gold.” It was the new alchemy. There were successful applications but there were many more failures. The hype eventually faded. One of the biggest lessons learned: Garbage In, Garbage Out.

I attended The MathWorks Expo in San Jose this fall. In his keynote address, “Beyond the ‘I’ in AI,” Michael Agostini stated that 80-90% of the current AI initiatives are failing. The main reason: lack of domain knowledge. He used as an example the monitoring of powdered milk plants in New Zealand. The moral of the story: you can’t just throw your data into an ML algorithm and expect to get out anything very useful. Perhaps tellingly, he showed plots from Principal Components Analysis (PCA) that helped the process engineers involved diagnose the problem, leading to a solution.

Another issue involves what sort of data is even appropriate for AI/ML applications. In the early stages of the development of new analytical methods, for instance, it is common to start with tens or hundreds of samples. It’s important to learn from these samples so you can plan for additional data collection: that whole experimental design thing. And in the next stage you might get to where you have hundreds to thousands of samples. The AI/ML approach is of limited usefulness in this domain. First off, it is hard to learn much about the data using these approaches. And maintaining parsimony is challenging. Model validation is paramount.

The old adage “try simple things first” is still true. Try linear models. Use your domain knowledge to select sample sets and variables, and to select data preprocessing methods that remove extraneous variance from the problems. Think about what unplanned perturbations might be affecting your data. Plan on collecting additional data to resolve modeling issues. The opposite of this approach is what we call the “throw the data over the wall” model where people doing the data modeling are separate from the people who own the data and the problem associated with it. Our experience is that this doesn’t work very well.
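To make “try simple things first” a bit more concrete, here is a minimal sketch in plain Python: a straight-line calibration fit by ordinary least squares, checked with leave-one-out cross-validation. The data values and the function names `fit_line` and `loo_rmse` are made up for illustration; this is not how PLS_Toolbox does it, just the general idea of fitting a simple linear model and validating it before reaching for anything fancier.

```python
from statistics import mean


def fit_line(x, y):
    """Ordinary least squares fit of y = slope * x + intercept."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def loo_rmse(x, y):
    """Leave-one-out cross-validated RMSE of the straight-line model."""
    squared_errors = []
    for i in range(len(x)):
        # Hold out sample i, fit on the rest, predict the held-out sample.
        x_train = x[:i] + x[i + 1:]
        y_train = y[:i] + y[i + 1:]
        slope, intercept = fit_line(x_train, y_train)
        squared_errors.append((y[i] - (slope * x[i] + intercept)) ** 2)
    return mean(squared_errors) ** 0.5


# Hypothetical data: instrument response vs. reference concentration.
response = [0.10, 0.21, 0.29, 0.42, 0.50, 0.61]
concentration = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]

slope, intercept = fit_line(response, concentration)
rmsecv = loo_rmse(response, concentration)
print(f"slope={slope:.2f} intercept={intercept:.2f} RMSECV={rmsecv:.2f}")
```

If a more complex model can’t beat the cross-validated error of something this simple, that usually says more about the data than about the algorithm.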

There are no silver bullets. In 30 years of doing this I have yet to find an application where one and only one method worked far and away better than other similar approaches. Realize that 98% of the time the problem is the data.

So is Eigenvector going to rebrand itself as a Data Science company? We certainly want people to know that we are well versed in the application of modern ML methods. We have included many of these tools in our software for decades, and we know how to work with these methods to obtain the best results possible. But we prefer to stay grounded in the areas where we have domain expertise. This includes problems in spectroscopy, analytical chemistry, chemical process monitoring and control. We all have backgrounds in chemical engineering, chemistry, physics, etc., plus collectively over 100 man-years of experience developing solutions that work with real data. We know a tremendous amount about what goes wrong in data modeling and what approaches can be used to fix it. That’s where the gold is actually found.

BMW

Eigenvector Turns 25

Jan 1, 2020

Eigenvector Research, Inc. was founded on January 1, 1995 by Neal B. Gallagher and me, so we’re now 25 years old. On this occasion I feel that I should write something, though I’m at a bit of a loss with regard to coming up with a suitably profound message. In the paragraphs below I’ve written a bit of history (likely overly long).

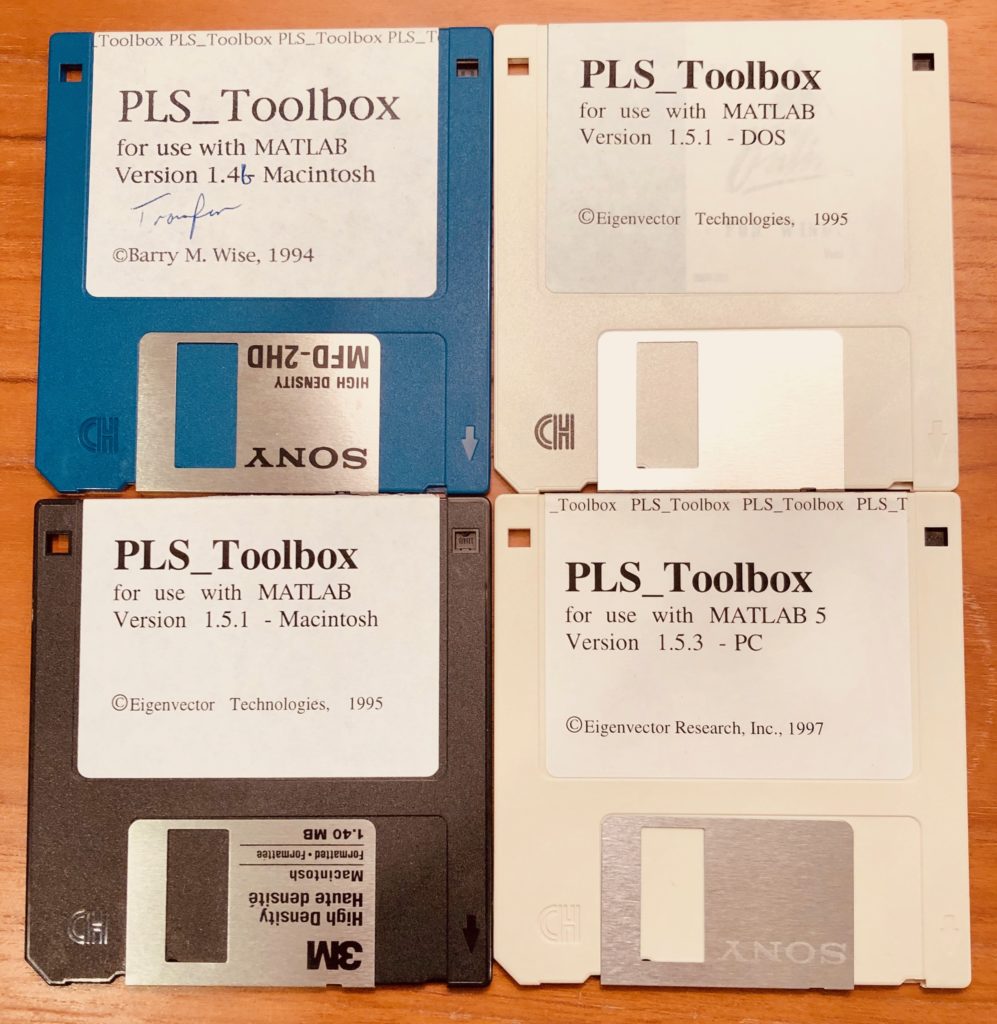

We started Eigenvector with each of us buying a Power Mac 8100 with keyboard, mouse and monitor. These were about $4k, plus another $1700 to upgrade the 8 MB of RAM it came with to 32 MB. Liz Callanan at The MathWorks gave us our first MATLAB licenses. Thanks! PLS_Toolbox was in version 1.4 and still being marketed under Eigenvector Technologies. Our founding principle was and still is:

Life is too short to drink bad beer, do boring work or live in a crappy place.

That’s a bit tongue-in-cheek but it’s basically true. We certainly started Eigenvector to keep ourselves in interesting work. For me that meant continuing with chemometrics, data analysis in chemistry. New data sets are like Christmas presents: you never know what you’ll find inside. For Neal I think it meant anything you could do that let you use math on a daily basis. Having both grown up in rural environments and being outdoor enthusiasts, location was important to us. And the bit about beer is just, well, duh!

As software developers we found it both interesting and challenging to make tools that allowed users (and ourselves!) to build successful models for calibration, classification, MSPC etc. As consultants we found a steady stream of projects which required both use of existing chemometric methods and adaptation of new ones. As we became more experienced we learned a great deal about what can make models go bad: instrument drift, differences between instruments, variable and unforeseen background interferents, etc. and often found ourselves as the sanity check to overly optimistic instrument and method developers. Determining what conclusions are supportable given the available data remains an important function for us.

Our original plan included only software and consulting projects but we soon found out that there was a market for training. (This seems obvious in retrospect.) We started teaching in-house courses when Pat Wiegand asked us to do one at Union Carbide in 1996. A string of those followed and soon we were doing workshops at conferences. And then another of our principles kicked in:

Let’s do something, even if it’s wrong.

Entrepreneurs know this one well. You can never be sure that any investment you make in time or dollars is actually going to work. You just have to try it and see. So we branched out into doing courses at open sites with the first at Illinois Institute of Technology (IIT) in 1998 (thanks for the help, Ali Çinar!). Open courses at other sites followed. Eigenvector University debuted at the Washington Athletic Club in Seattle in 2006. We’re planning the 15th Annual EigenU for this spring. The 10th Annual EigenU Europe will be in France in October and our third Basic Chemometrics PLUS in Tokyo in February. I’ve long ago lost count of the number of courses we’ve presented but it has to be well north of 200.

Our first technical staff member, Jeremy M. Shaver, joined us in 2001 and guided our software development for over 14 years. Our collaborations with Rasmus Bro started the next year in 2002 and continue today. Initially focused on multi-way methods, Rasmus has had a major impact on our software from numerical underpinnings to usability. Our Chemometrics without Equations collaboration with Donald Dahlberg started in 2002 and has been taught at EAS for 18 consecutive years now.

We’ve had tremendously good fortune to work with talented and dedicated scientists and engineers. This includes our current technical staff (in order of seniority) R. Scott Koch, Robert T. “Bob” Roginski, Donal O’Sullivan, Manny Palacios and Lyle Lawrence. We wouldn’t trade you EigenGuys for anybody! It also includes past staff members of note: Charles E. “Chuck” Miller, Randy Bishop and Willem Windig.

So what’s next? The short answer: more of the same! It’s both a blessing and a curse that the list of additions and improvements that we’d like to make to our software is never ending. We’ll work on that while we continue to provide the outstanding level of support our users have come to expect. Our training efforts will continue with our live courses but we also plan more training via webinar and in other venues. And of course we’re still doing consulting work and look forward to new and interesting projects in 2020.

In closing, we’d like to thank all the great people that we’ve worked with these 25 years. This includes our staff members past and present, our consulting clients, academic colleagues, technology partners, short course students and especially the many thousands of users of our PLS_Toolbox software, its Solo derivatives and add-ons. We’ve had a blast and we look forward to continuing to serve our clients in the new decade!

Happy New Year!

BMW
